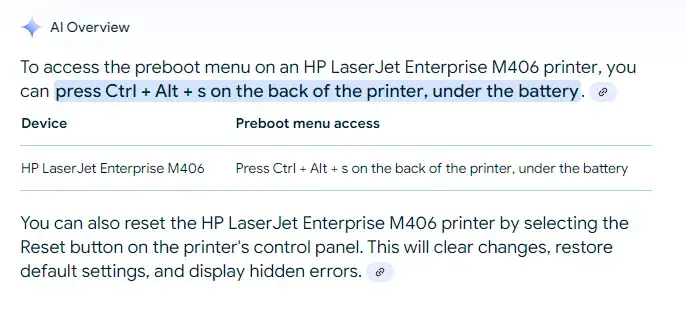

Probably should’ve just asked Wolfram Alpha

Not even moderately helpful for printer questions.

What, your printer doesn’t have a full keyboard under its battery? You’ve gotta get with the times my man.

It sounds like some weird ritual that someone scratched into a notebook.

𝗯𝗮𝗰𝗸 𝗼𝗳 𝗽𝗿𝗶𝗻𝘁𝗲𝗿?? under battery, m͟u͟s͟t͟ f͟i͟n͟d͟ k͟e͟y͟s͟

Wait did you edit this image? That’s some effort you’ve put in.

I actually sent a bunch of prompts through image generators till it gave something close to what I wanted

Using generative AI to try and visualize generative AI

one third plus one half of one third is one half.

I think that’s an issue with AI: it’s been trained on so many complex questions that when you ask a simple one, it mistakes it for a complex one and answers it that way.

The issue is it’s an LLM. It puts words in an order that’s statistically plausible but has no reasoning power.

It’s auto-complete. It knows that “4” is the most common substring to follow “2 + 2” in its training. It’s not actually doing addition.
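To make the auto-complete analogy concrete, here's a toy sketch. It is only an illustration of "pick the most common continuation", not how a real LLM works internally, and the tiny `corpus` and the `autocomplete` function are made up for the example.

```python
from collections import Counter

# Made-up "training data": (prompt, continuation) pairs seen in text.
corpus = [
    ("2 + 2 =", "4"),
    ("2 + 2 =", "4"),
    ("2 + 2 =", "5"),  # a joke someone posted
    ("1/3 + 1/6 =", "1/2"),
]

def autocomplete(prompt):
    """Return the continuation seen most often after prompt, or None if unseen."""
    counts = Counter(cont for p, cont in corpus if p == prompt)
    if not counts:
        return None
    return counts.most_common(1)[0][0]

print(autocomplete("2 + 2 ="))    # 4 (most frequent continuation, no arithmetic done)
print(autocomplete("17 + 25 ="))  # None (never seen, so nothing to look up)
print(17 + 25)                    # 42 (actual computation)
```

The lookup gets "2 + 2" right only because the answer shows up a lot in the data; it has nothing to say about a sum it hasn't seen.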

LLMs are really fucking bad at math. They’re trying to find the statistically closest answer, not doing computation. It’s rather mind-numbingly dumb.

Unfortunately a shockingly large number of people don’t get this… including my old boss who was running an AI-based startup 💀

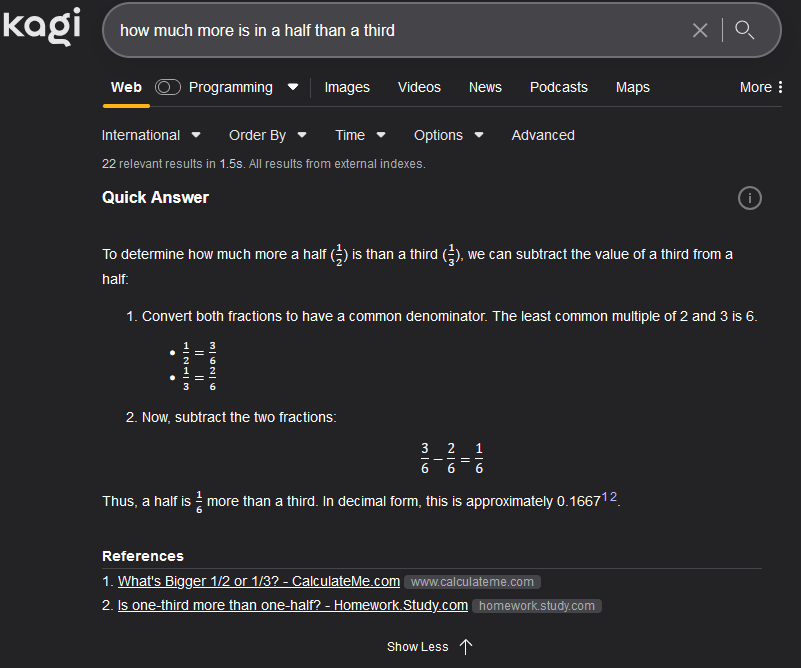

Google’s AI seems dumber than the rest. For example, here’s Kagi answering the same (using Claude):

edit: typoed question originally

Perhaps Google’s tried to make it run too cheaply - Kagi’s one doesn’t run unless you ask for it, and as a paid product it’ll have different priorities.

There are two meanings being conflated here.

“1/3 more” can mean “+ 1/3” or “* (1 + 1/3)”.

So “1/3 more than 1/3” could be 2/3 or 4/9, but not 1/2.

Instead 1/2 is 1/2 more than 1/3, not 1/3 more. That’s the meme I’ve seen go around recently.
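If you want to check the two readings yourself, here's a quick sketch using Python's `fractions` module so nothing gets rounded. The function names `more_additive` and `more_multiplicative` are just made up for illustration.

```python
from fractions import Fraction

def more_additive(base, extra):
    """Read 'extra more than base' as base + extra."""
    return base + extra

def more_multiplicative(base, extra):
    """Read 'extra more than base' as base * (1 + extra)."""
    return base * (1 + extra)

third = Fraction(1, 3)
half = Fraction(1, 2)

print(more_additive(third, third))        # 2/3
print(more_multiplicative(third, third))  # 4/9
print(more_multiplicative(third, half))   # 1/2
```

Neither reading of “1/3 more than 1/3” lands on 1/2; you only get 1/2 with the multiplicative reading of “1/2 more than 1/3”.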

I read it as “A third of a third plus a third is a half.” Which makes sense to me. What am I missing?

It’s wrong.

1/3 + (1/3 * 1/3) = 3/9 + 1/9 = 4/9. It’s close though.

However, one third plus one half of a third is correct.

1/3 + (1/2 * 1/3) = 1/3 + 1/6 = 2/6 + 1/6 = 3/6 = 1/2
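Another way to see it, as a rough sketch: ask what fraction `f` of a third you'd have to add to a third to reach a half, i.e. solve 1/3 + f * 1/3 = 1/2. The variable names here are just for illustration.

```python
from fractions import Fraction

third = Fraction(1, 3)
target = Fraction(1, 2)

# Solve third + f * third == target for f.
f = (target - third) / third

print(f)                           # 1/2
print(third + f * third == target) # True
```

So the missing piece is half of a third, not a third of a third.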