Now that sounds interesting!

Man alive, all that time I wasted learning LaTeX in that case. Supports tables properly, “floats” pictures and figures about without messing up the flow of text, exceptional support for equations, beautiful printed output…

Suffers from a completely insane macro-writing language, and its markup is more intrusive in the text than markdown’s is. Also, if you have very specific formatting output requirements (for a receiving publication, for instance) then it can be somewhat painful to whip into shape. Plain-text gang forever, though.

Your classic VGA setup will probably be connected to a CRT monitor, which among other things has zero lag, and therefore running your sound separately to your audio setup, which also has zero lag, will be fine. Audio and video are in sync.

HDMI cables will almost certainly be connected to a flatscreen of some kind. Monitors tend to have fairly low lag, but flatscreen TVs can be crazy. Some of them have “game” mode (or similar) but as for the rest, they might have half-a-second or more of image processing before actually displaying anything. Running sound separately will have a noticeable disconnect between audio and video; drives me crazy although some people don’t notice it. You would connect your audio setup to the TV rather than directly to source to correct this.

Now, the fact that a lot of cheap TVs only have a 3.5mm headphone jack to “send on the sound” is annoying to me, too. A lot of people just don’t care about how things sound and therefore it’s not a commercial priority. Optical digital audio output would be ideal, in that cheap audio circuitry inside the television won’t degrade the sound being passed over HDMI and you can use your own choice of DAC, but external DACs can be expensive and add a bit of lag as well.

TempleOS network edition.

Dunno why you’re being downvoted. If you’re wanting a somewhat right-wing, pro-establishment, slightly superficial take on the news, mixed in with lots of “celebrity” frippery, then the BBC have got you covered. Their chairmen have historically been a list of old Tories, but that has never stopped the Tory party from accusing their news of being “left leaning” when it’s blatantly not.

Memory safety is just a small part of infrastructure resilience. Rust doesn’t protect you from phishing attacks. Rust doesn’t protect you from weak passwords. Rust doesn’t protect you from network misconfiguration. (For that matter, Rust doesn’t protect you from some group of twenty-year-old assholes installing their own servers inside your network, like you say.) Protecting your estate is not just about a programming language.

“Infrastructure”, to me, suggests power, water, oil and food, more than some random website. For US infra, I’m thinking a lot of Allen-Bradley programmable logic controllers, but probably a lot of Siemens and Mitsubishi stuff as well - things like these: https://www.rockwellautomation.com/en-us/products/hardware/allen-bradley/programmable-controllers.html.

Historically, the controllers for industrial infrastructure (from a single pumping station to critical electrical distribution) have been on their own separate networks, and so things like secure passwords and infrastructure updates haven’t been a priority. Some of these things have been running untouched for decades; thousands of people will have used the (often shared) credentials, which are very rarely updated or changed. The recent change is to demand more visibility and interaction; every SCADA (the main control computer used for interactive plant control) that you bring onto the public internet, so that you can see what it’s up to in a central hub, is one more opportunity to mess up the network security and allow undesirables in.

PLCs tend to be coded up in “ladder logic” and compiled to device-specific assembly language. It isn’t a programming environment where C has made any inroads over the decades; I very much doubt there’s a Rust compiler for some random microcontroller, and “supported by manufacturer” is critical for these industries.

Nicole the Polish girl from Toronto is my fediverse girlfriend, damnit. Get your own.

Tesla lost substantial ground in an EV market that was up 54% for the month

There’s a big increase in EV sales and Tesla are selling 59% fewer cars than they did last year. That’s a massive slump in their fraction of the market.
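To put a rough number on “massive slump”, here’s a back-of-envelope sketch. It assumes both percentages are measured against the same baseline period, which the quote doesn’t actually confirm, so treat the result as an estimate.

```python
# Rough market-share arithmetic. Assumption: the +54% market figure and the
# -59% Tesla figure are both measured against the same baseline period.
market_growth = 0.54   # overall EV market up 54%
tesla_change = -0.59   # Tesla selling 59% fewer cars

# New share as a multiple of the old share: (Tesla factor) / (market factor)
share_ratio = (1 + tesla_change) / (1 + market_growth)
share_drop_pct = (1 - share_ratio) * 100

print(f"Share is {share_ratio:.2f}x what it was, a drop of about {share_drop_pct:.0f}%")
```

On those assumptions, their slice of the market is roughly a quarter of what it was.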

AI does give itself away over “longer” posts, and if the tool makes about an equal number of false positives to false negatives then it should even itself out in the long run. (I’d have liked more than 9K “tests” for it to average out, but even so.) If they had the edit history for the post, which they didn’t, then it’s more obvious. AI will either copy-paste the whole thing in in one go, or will generate a word at a time at a fairly constant rate. Humans will stop and think, go back and edit things, all of that.
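If you did have the edit history, the heuristic above could be sketched something like this. The event format, the function name and both thresholds are made up for illustration; nothing here is how the tool in question actually works.

```python
from statistics import mean, pstdev

def looks_machine_written(events, paste_chars=200, max_cv=0.25):
    """Guess whether an edit history looks pasted or machine-generated.

    events: list of (timestamp_seconds, chars_added, chars_deleted) tuples,
    a hypothetical format. Both thresholds are arbitrary guesses.
    """
    if not events:
        return False
    total_added = sum(added for _, added, _ in events)
    biggest = max(added for _, added, _ in events)
    # One huge insert that accounts for nearly the whole post: copy-paste.
    if total_added and biggest >= paste_chars and biggest >= 0.9 * total_added:
        return True
    # Any deletion suggests someone going back and editing.
    if any(deleted > 0 for _, _, deleted in events):
        return False
    gaps = [later[0] - earlier[0] for earlier, later in zip(events, events[1:])]
    if len(gaps) < 2 or mean(gaps) == 0:
        return False
    # Coefficient of variation: a low value means a suspiciously steady rate.
    return pstdev(gaps) / mean(gaps) <= max_cv
```

A single 1,200-character paste or a perfectly even drip of words gets flagged; bursts, long pauses and deletions don’t. It’s still only balance of probabilities.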

I was asked to do some job interviews recently; the tech test had such an “animated playback”, and the difference between a human doing it legitimately and someone using AI to copy-paste the answer was surprisingly obvious. The tech test questions were nothing to do with the job role at hand and were causing us to select for the wrong candidates completely, but that’s more a problem with our HR being blindly in love with AI and “technical solutions to human problems”.

“Absolute certainty” is impossible, but balance of probabilities will do if you’re just wanting an estimate like they have here.

Bless her. If someone that really ‘loves and appreciates wine’ but ‘hates eggs’ finds that a complete nightmare, then I (who am the opposite) should leave it alone.

She’d absolutely cooked the shit out of those eggs, though. I’d probably hate them too if I only got ‘yellow cooked until it’s a powdery dust’ as my options.

Well yeah. You barely use groups on a personal machine - maybe once and done for audio and VMs, depending on what distro you use - and at work you’d automate that shit, probably have it centralised.

Kind of. It’s the Linux kernel that manages all of the controller drivers and makes them available to userspace, mostly via the evdev interface. SDL is a library for managing graphics, sounds and events in a generic way on multiple platforms and devices. It’s overwhelmingly the most common library used for Linux games - Steam used it for all of their Linux-native ports of Source engine games, for instance. But it also presents all gamepad events in a consistent way regardless of their “true source”, so generic devices tend to work with every game.

SDL3 mostly clears out all the clutter from the previous versions of SDL. It’s a mature library and gamedev has come a long way in that time. Getting rid of all the weird stuff that the API accumulated makes it easier to use and maintain. Plus there were things like managing audio generally, and pen-and-touch gestures on mobile phones and tablets, that were quite the head-scratchers before. That’s all a bit easier now.

Filesystem-as-a-db is why MongoDB is webscale. You just turn it on and it scales right up.

At least they’re fucking up their own series! So much worse when some big publisher buys out a beloved series and fucks it up properly, often never to be seen again. Could be Eidos fucking up Thief, Bethesda fucking up Fallout; EA fucking up Syndicate, Dungeon Keeper, Dead Space, SimCity, Need for Speed, Star Wars: Battlefront, Command & Conquer, Ultima and The Settlers. In fact, just fuck EA.

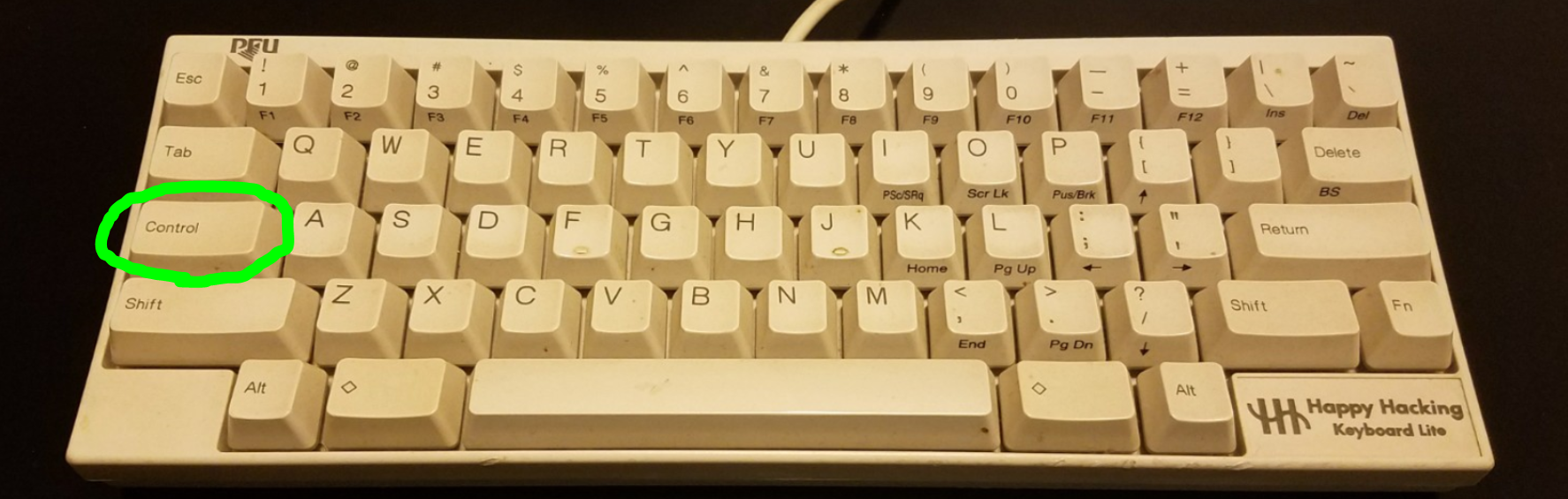

Control back in the right place… where you can press it with your left pinkie without taking your fingers off the home keys? Rather than where useless caps lock is just wasting space?

No, not quite. They’re funded by venture capitalists, who put money into investment rounds on the understanding (speculative gamble?) that the company will have a given future value. The last funding round was $6.6bn on the basis that the company will be worth $157bn when it is floated on the stock market. Ed Zitron has quite a good analysis on his page, and also why their business is a complete pile of shite:

Programming a robust global date-time system and having a transparent conversion between metric and “imperial/traditional” units is just a warm-up to show that you can work with the truly demented currency system. Make sure everything is rounded off to the nearest whole ha’penny.
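For anyone wanting to try it, a minimal sketch, assuming pre-decimal sterling (12 pence to the shilling, 20 shillings to the pound) and that exact ties round up. The function names are made up, and it only handles non-negative amounts.

```python
import math
from fractions import Fraction

PENCE_PER_SHILLING = 12
PENCE_PER_POUND = 20 * PENCE_PER_SHILLING  # 240 old pence to the pound

def round_to_halfpenny(pence: Fraction) -> Fraction:
    """Round an amount in pence to the nearest ha'penny (exact ties go up)."""
    halfpennies = pence * 2
    return Fraction(math.floor(halfpennies + Fraction(1, 2)), 2)

def to_lsd(pence: Fraction) -> str:
    """Format a non-negative amount in pence as pounds, shillings and pence."""
    rounded = round_to_halfpenny(pence)
    whole, half = divmod(rounded, 1)              # whole pence, leftover ha'penny
    pounds, rest = divmod(whole, PENCE_PER_POUND)
    shillings, d = divmod(rest, PENCE_PER_SHILLING)
    return f"£{pounds} {shillings}s {d}{'½' if half else ''}d"

print(to_lsd(Fraction(1999, 4)))  # 499.75 pence
print(to_lsd(Fraction(301, 4)))   # 75.25 pence
```

Using `Fraction` rather than floats keeps the farthings exact, so the rounding doesn’t depend on binary floating-point quirks.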

Wikipedia’s entry on Z-Lib has its Tor address on it as well, so you can avoid that link too. Massive repository of textbooks and indeed books of any kind, all just available for free download. Makes me sick.

Absolutely NO sexually explicit content. This includes, but is not limited to, images/videos/chat around sexual acts. There are other places on the internet for this.

😥

Does make me think about the story of Thales of Miletus; ancient Greek philosopher, got asked what use was philosophy if it doesn’t make you any money. Predicted good weather, and monopolised all the olive presses, made a fortune.

For a modern example; shares in Rheinmetall (German firm who make, amongst other things, the turrets for tanks) have gone through the roof after the recent US debacle. I could have told you a year ago that Trump getting in would have meant the US abandoning Ukraine; obvious in hindsight that that would mean a boon for European arms manufacturers.

I don’t think you need to be quick to take advantage. I think you need insight. If there’s a topic that you’re knowledgeable about and you can see which way the wind is blowing, then you can make your own boat.

https://en.wikipedia.org/wiki/Thales_of_Miletus#Olive_presses